| Su | Mo | Tu | We | Th | Fr | Sa |

|---|---|---|---|---|---|---|

| 1 | ||||||

| 2 | 3 | 4 | 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 | 13 | 14 | 15 |

| 16 | 17 | 18 | 19 | 20 | 21 | 22 |

| 23 | 24 | 25 | 26 | 27 | 28 | 29 |

| 30 | 31 |

|

How to Influence Others at Work explores NLP techniques in the context of work. It was originally published in 1988. McCann was clearly influence by Bandler and by Milton Erickson (Erickson also influenced Bandler). The result is a short and engaging book, which certainly helps you remember that to be empathetic in your communications you need to match the communication style of your colleagues. Determining preferred means of communication - even if one does not necessarily completely believe in the psycho-verbal communication model - is undoubtedly a good idea. One concept that I found fascinating is the fact that the direction of eye movement reveals the type of remembering or thought construction that an individual is engaged in. When you are remembering - for most people - the eyes tend to drift to the person's left. When you are constructing thoughts - the eyes tend to drift to the person's right. Not everyone is the same in this - it depends on your handedness, for example. However, as Greg Hartley has described - you can use this basic observation to be more closely in synchronization with the person you are talking to. In addition to the information about communication models and the cues to people's recall and thinking processes the book talks extensively about pacing and provides specific instructions on improving your ability to empathetically pace colleagues. The book is now quite expensive at around 30 dollars - but I recommend it if you are interested in a practical, short book about improved communication at work.

|

This is a really good self-help book! Richard Brodie makes much of the fact that he went to Harvard (which he mentions on every other page) and the fact that he worked at Microsoft (this mentioned in one only one page in ten). However, the wisdom conveyed in the pages is impressive - and certainly worth the ten dollar price tag. This is a book that I recommend - if you are interested in improving the quality of your life. Some of the key elements are:

What is appealing about the book is the suspicion that it conveys that here is an author who had the chance to do what many would like to do: take time to evaluate his life, experiment with various regimes and strategies, select the best and then communicate that information to others. The reader imagines that Brodie had the opportunity to do this because he made some money from his time at Microsoft (I hope that was the case).

Here is some additional information. In order to establish your life purpose, consider the following questions:

When you have thought about your most important needs, create a Success Checklist which links needs, means and structures. For example, an engineer might have the following needs: (independence, control, recognition) linked to the means of (making money) in the structure of a (software engineering job) the same individual might have additional needs: (discovery, challenge, accomplishment) linked to the means of (solving technical problems) also in the structure of a (software engineering job).

These are interesting ideas which augment the GTD fascination with clearing the decks and charging through the lists, with attention to the why's and wherefore's.

|

Solving problems through diagrams is appealing and Lisa J. Scheinkopf's Thinking for a Change (not to be confused with Maxwell's Thinking for a Change), based on Eliyahu M. Goldratt's 'Thinking Processes', sets out a variety of diagramming methods focused on problem solving and creating change. The book is good but strangely organized. An abstract Part 1 explains the terms used in the Thinking Process diagrams and the general ideas that underlie the processes. A disjointed Part 2 illustrates the thinking processes in an order of presentation that may confuse the reader. Here the Transition Tree - that seeks plan out the activities needed to effect a change is presented first. This is followed by the Future Reality Tree, used to set objectives, and then the Current Reality Tree, used to identify the changes that need to be made. The reader could make the case that these chapters are in reverse order - and they are followed by a discussion of the Evaporating Cloud - which is the best known and most accessible Thinking Process, and then an overview of all of the Thinking processes applied in force, which emphasizes an entirely different ordering. Why Lisa elected to present her material in this order is not clear - my theory is that she is attempting to evangelize the less well known Thinking Processes - and therefore introduces them early in order to give them exposure. Aside from this - I was at a slight loss over the structure and intention of the book. The book has other failings: it is expensive at sixty dollars and its diagrams are often sprawling. The diagrams of the overall processes, for example, are grown in fractal fashion before the reader over several pages, each successive page shrinking a former part of the diagram and adding a new section. This diagram growing is not made clear to the reader - who is left to deduce what is happening to the diagram by flicking back and forth between the pages. It would have been better to show the entire diagram and then described the various parts.

Despite the failings in overall organization and the diagrams - the book is valuable and I recommend it to those interested in studying Goldratt's Thinking Processes in detail. The Evaporating Cloud diagram commonly provides insights when I use it to analyze problems and concerns and if you have not looked at it before - I would suggest that you take a look at this relatively simple construction - as it will certainly enable you to understand and resolve many conflicts - and at least develop additional perspectives that may be helpful to you. Several web site contain examples of Evaporating Clouds (see, for example, http://en.wikipedia.org/wiki/Evaporating_cloud). Thinking for a Change takes the analysis of Thinking Processes beyond what I have encountered on the web and is intended for the expert Thinking Processes person, I believe. I intend to apply myself to the material - but I should have liked a book that was easier to digest - and was perhaps shorter. Perhaps Lisa will create a second version which is simpler and more accessible than the first.

Here is an interesting site which provides additional information on the thinking processes and methods: http://www.dbrmfg.co.nz - it is well worth a visit.

|

Charles W. McCoy is a judge and therefore necessarily a careful and methodical thinker, one would hope. The opening of this book takes you through a case from McCoy's court - a seemingly open and shut case of a promising career cut short by industrial carelessness and corner cutting. McCoy modestly shows how even a judge can be taken in by the superficial and how, in this case, careful and sceptical consideration of the facts enabled the correct outcome to be achieved. However, this was a close call - and McCoy's response to this incident, and many others in a distinghished legal career, has been to set down the basics of thinking issues through carefully. I found this an interesting read. Perhaps too much focus on optical illusions and what seemed like standard examples of critical thinking exercises - but interesting and challenging in its approach. If you are interested in sharpening your thinking skills - this is a recommended read.

|

Emotions and feelings are difficult to understand - for everyone - and for me in particular! Some feelings are especially hard to understand - hence dedicated books. In reading through you will discover many things. Alixithymia, for instance, a condition by which people can be 'feeling numb' - able to be aware of feelings - but not be connected to them. Stumbling on Happiness takes you through a detailed analysis of feelings, awareness and experience - and provides the customary selection of experiments involving folk wandering around universities worldwide - not all of whom were paid - but all of whom interact with people asking for directions, looking glamorous on dangerous bridges, and so forth, with the customary selfless dedication of those committed to the ways of learning.

The conclusions are interesting - and like the non-academic 'You Version 2.0' will help you to understand the stack of capabilities and functions (and frequent miss-wirings between them) that constitute You. This is not a self-help book - it is a book by a psychologist (a specialized human animal) written with the general understanding that psychology is a valid science (despite its lack of electricity) with a solid emphasis on overviewing human psychology and injecting humour in the process.

Interestingly, I observed the card trick of 'Why Didn't I Think of That' early on in this book - and naturally was not taken in by that a second time. (Actually, I think that I saw through the trick the first time around, too).

As discussed in elsewhere on this site, the VCD format allows you to play videos and movies on your DVD player using normal CD-R media. One thing that you might want to do is create a continuous loop VCD - you can use that to give yourself a constant background of favorite material from YouTube, create a looping product demonstration for a show or for a store, or even turn your television into a fishtank - using a movie or two of aquatic life. The VCD format can accommodate menus and considerable complexity. All the information that you need to understand VCD menus is [5]here on PCByPaul and [6]here (in detail). However, if you want to create a continuous loop playing video using VCD - you just need to do the following. Start with two flash movies, say video1.flv and video2.flv. First, create the necessary VCD format mpg files:

ffmpeg.exe -i video1.flv -target ntsc-vcd -ac 2 video1.mpg ffmpeg.exe -i video2.flv -target ntsc-vcd -ac 2 video2.mpg

Now you need to create a template xml file to control vcdimager's production of your movie. You use:

vcdxgen -t vcd2 video1.mpg video1.mpg

...to do that.

This command creates a file called videocd.xml. You need to change this slightly to create the looping effect. To do this, remove the <playlist> portions in the pbc section in the file. Replace these with <selection> items, as follows:

<selection id="lid-000">

<next ref="lid-001"/>

<timeout ref="lid-001"/>

<wait>1</wait>

<play-item ref="sequence-00"/>

</selection>

<selection id="lid-001">

<next ref="lid-000"/>

<timeout ref="lid-000"/>

<wait>1</wait>

<play-item ref="sequence-01"/>

</selection>

Take a look at the changes and you will see how the looping effect is achieved. When the first section times out, it moves to 'lid-001' (the next section). When the second section times out, it moves to 'lid-000' (the first section) - and so on.

Once you have updated videocd.xml, you can use it to create the image to be burned to cd with:

vcdxbuild videocd.xml

And then finally burn the VCD with:

cdrdao write --device 1,0,0 --driver generic-mmc-raw --force --speed 4 videocd.cue

Now you can create some nice endless, atmospheric video backgrounds - and display them on your large screen television. If you need more than 2 movies - just create the appropriate number of <selection> sections and make sure that the last one times out to the first.

|

Influence is an influential book - and it has permeated high-tech. For example, Joel Spolsky referred to it in a post on the influence that Microsoft were attempting to secure in the great Vista laptop blogger giveaway (Bribing Bloggers). A very short summary of the principles of influence follows:

If you have ever marvelled at the ability of everyone around you to spend inordinate amounts of money on 'plush' dolls, trolls or manufactured pop idols - this is a useful and explanatory book. The utility of the book is in exposing the strands of attack of the influencer - these are normally fairly obvious - but it is interesting to have the examples and the categorizations that Cialdini provides. It is also troubling to read of the potential consequences of influence in its most extreme and dire manifestations.

|

A short read. This book(let) reminds you of the criticality of not damaging the ego of another person. Excellent advice - Dale Carnegie-like - but difficult to apply in practice - because it goes against our naturally selfish tendancies. Here is the basic structure of the book.

Here is a short script which goes through a directory structure and operates on mp3 or MP3 files. It uses standard bash methods - and I am posting it here in case you are interested - you can achieve the same effect with:

find . -name "*.[mM][pP]3" -print

...but this script is more fun - and substantially slower - giving you time to think about other things - which is often useful. The script prints out the names of the files it encounters - you could have it do something else - like report checksums (cksum) or modification times (ls -lt). The necessary lines for these operations have been commented out in the script. You can use this script as a template to carry out other file based scripted operations. Also note that it won't find files with the following cases in their extensions: mP3 or Mp3.

#!/bin/bash

scandir () {

for item in *

do

if [ -f "$item" ] ; then

curdir=`pwd`

nfile=`expr $nfile + 1`

EXT=`echo "$item" | rev | cut -c 1-3 | rev`

if [ $EXT = "mp3" -o $EXT = "MP3" ]

then

echo "$curdir"/"$item" | cut -c${ld1}-

fi

elif [ -d "$item" ] ; then

cd "$item"

scandir

ndirs=`expr $ndirs + 1`

cd ..

fi

done

}

startdir=`pwd`

ld1=`echo $startdir | wc -c`

ld1=`expr $ld1 + 1`

echo "Initial directory = $startdir"

ndirs=0

nfile=0

scandir

echo "Total directories searched = $ndirs"

echo "Total files = $nfile"

Do you want to make a VCD that will display photographs on your DVD player? The following recipe is not elaborate. It doesn't deal with a sound track or with transition effects between images. However, if you want to share photographs using a DVD player and a television - this method is effective and - this is the simplest method that I could find. If you have suggestions for improvements, please let me know!

First, prepare a set of jpg files. It is convenient to do this with sequentially named files - because each jpg file will be turned into an mpg file, and the mpg files will be included in the VCD. Here is a short script which will name your jpg files IMG0001.jpg, IMG0002.jpg, etc. This script renames your existing jpg files in this directory (so work with copies of your photographs).

#!/bin/sh count=1 BASE=IMG for file in `ls -d *.jpg | sort -r` do echo $file count=`expr $count + 1` PADDED=`printf "%04d" $count` name=$BASE$PADDED".jpg" mv -i $file $name done

Having named the jpg files in a convenient way - we then need to insure that they are appropriately scaled. Again a short script comes in handy.

#!/bin/sh for file in *.jpg do echo $file jpegtopnm $file | pnmscale -xysize 768 576 > tmp.pnm pnmtojpeg tmp.pnm > `basename $file .jpg`.scaled.jpg done

Now your jpg files are appropriately named, and appropriately sized, the next step is to create a matching set of short mpg files. To do this we will make use of ffmpeg. Here is the script to achieve this.

#!/bin/sh

for file in IMG*.scaled.jpg

do

echo $file

count=0

while true

do

count=`expr $count + 1`

if [ $count -eq 21 ]

then

break

fi

cp $file tmp$count.jpg

done

ffmpeg -f image2 -i tmp%d.jpg -target ntsc-vcd `basename $file .test.jpg`.mpg

done

Now you need to create the 'selection' segments for the xml file that we will use to create the VCD image. Another script is needed. Note that here the number of images is 19 in the example that I used, and this means that there is an '18' in the script - you will probably need to change this for your files.

#!/bin/sh

#NB one less that the number of mpg's to be

#included as the vcd xml is zero based

max=18

count=0

while true

do

thissec=`printf "%03d" $count`

countp1=`expr $count + 1`

nextlid=`printf "%03d" $countp1`

sequeid=`printf "%02d" $count`

if [ $count -eq $max ]

then

nextlid="000"

fi

cat <<!

<selection id="lid-$thissec">

<next ref="lid-$nextlid"/>

<timeout ref="lid-$nextlid"/>

<wait>5</wait>

<play-item ref="sequence-$sequeid"/>

</selection>

!

count=`expr $count + 1`

if [ $count -gt $max ]

then

break

fi

done

This script will write to standard out a revised set of <selection> items to put into the xml file needed to create the VCD. Execute the command, capture its output to a file, and edit this file into the videocd.xml file created with the following command.

vcdxgen -t vcd2 IMG0002.mpg IMG0003.mpg

(include all the mpg files on the command line). Edit the selection sections into the resulting videocd.xml file - in the place of the playlist sections. Now you can create the VCD image and cue file with:

vcdxbuild videocd.xml

...and burn the resulting image with:

cdrdao write --device 1,0,0 --driver generic-mmc-raw --force --speed 4 videocd.cue

As noted in the introduction, this is not a fancy approach - but it makes nice simple photograph VCDs. In the future I may create a single script which does the work - and improve its error checking.

If you have recently added to your mp3 or jpg library and want to backup just new additions, here is the command to create a tar archive of files modified in the last 2 days.

find . -mtime -2 -print | sed -r "s/([^a-zA-Z0-9])/\\\\\1/g" | xargs tar -rvf new.tar

The find command finds the files, the sed command escapes non-ascii characters, the xarg command fires up tar commands which append to the tar archive called 'new.tar'. The find option '-mtime -2' means files that have been modified in the last day days.

|

Smart Choices - A Practical Guide to Making Better Decisions by John S. Hammond, Ralph L. Keeney, Howard Raiffa

In contrast to 'Thinking For A Change', which tackles a broader subject, but winds up focused on decisions, I found this an extremely interesting read. It took me a while to get used to the style, which is verbose, though this is a short book. My initial resistance to the style was based on the fact that I have seen other, more academic books on this subject (e.g. Strategic Decision Making: Multiobjective Decision Analysis with Spreadsheets by Craig W. Kirkwood) which are quickly mathematical and algorithmic. In this case the lack of overt math and algorithm was an initial disappointment. However, once I succumbed to the style, I found the information both interesting and informative - the book helped me understand the processes of decision making better. The authors take you through many different descisions in the text - all the while documenting the decision method which can be characterized as "PrOACT" and "URL". PrOACT stands for Problem, Objective, Alternatives, Consequences, Tradeoffs - prior to reading the book I would have suspected that applying this type of breakdown to many decisions would be overkill. However, as the authors frequently mention, not all decisions require the 'full treatment' and the clarity of the steps in PrOACT does provide confidence in the application in practical situations. Here is a short summary of 'Smart Choices':

(URL stands for Uncertainty, Risk and Linked decisions - as the authors probably are not interested in blogging - the acronym URL is not used in the book). That the dominant alternative and 'Even-Swap' methods reduce the complexity of alternative analysis was appealing too. There are many interesting resources on decision making on the web see the DOE decision making guidebook, for example. However, this books is worth the investment. A recommended purchase! Perhaps I am now ready (again) to tackle Craig Kirkwood's book.

|

A book focused on Bourne shell scripting. This has some real world examples - but unfortunately it generally fails to be thoroughly interesting or as absorbing as it promises to be. The major failings are the length of the book - it is just too long to be assimilatable, its academic examples, and its lack of structure. It is not completely clear as you read through who the intended audience or audiences are. It is not clear where one might apply the examples that given.

The classical books on software technologies generate stopping off points where the reader is forced to consider immediately implementing what is described in his or her day to day work, either as a tool or an enhancement to process or design. This book did not generate these stopping off points for me. Instead I was occasionally fretting that there might be another way to do what had just been described. This does not happen when one reads Bentley, Kernighan, Wall et al.

So I give it just 2/5. I would only buy it if you are interested in surveying all the works on scripting, it is required reading for a course, or if for some reason the example chapter available online particularly appeals to you.

|

A collection of short articles concerning the everyday with a scientific bias, which stem from observations and answers supplied by correspondents around the world to the New Scientist magazine.

It is interesting stuff: the fastest way to pour fluid from a bottle, the optimal order in which to combine beer and lemonade, and the feasible return radius of lost bees from their hives, are examples of the material covered.

Interesting, but perhaps not immediately practical and therefore constantly at risk of being as useless as fiction. But it is an enjoyable read and could be useful if your mission were to inspire curiosity in colleagues or a class of iPod-ed teenagers.

It seems that I am constantly fighting a lack of disk space. One reason for this is that I am making increased use of virtual machines, so I store a complete image of a machine on another machine's hard disk. This gets through disk space rapidly.

The other problem that I seem to face is that operating system and 'office' program updates regularly exceed a gigabyte. This rapidly eats through whatever diskspace I arrange to have free.

One tool to fight against wasted space is 'rdfind'. This is an efficiently written duplicate file finder. It is intended for dealing with backups where several dumps of a machine or set of machines are being managed and removing file duplication saves time and space.

rdfind uses various tricks to reduce the complexity of comparing every file to every other file in its search path. It uses file size as an initial check, it uses the first few bytes in the file as secondary check, and so on.

Here is a typical command line to find (but not do anything about in this case) local file duplicates. This will create a file called 'results.txt' in the directory in which you run rdfind describing what rdfind uncovers.

rdfind -n true ./

rdfind also has various options for removing duplicates, or trimming the files on various backup disks, or replacing duplicates with links.

To install rdfind, simply download the source, build, and install the program. Thank you Paul Dreik!

If you want to convert ascii into PDF - and you want to do it with a little style, use the following:

a2ps -media=Letter -o - example.txt | ps2pdf - example.pdf

Well, I found this slightly unintuitive. If you want rsync to use a specific non-standard port, and you don't have the rsync daemon running, you need to force ssh to use this port, like so:

rsync -ar --partial --progress --rsh='ssh -p 12345' username@xyz.abc.com:/path/to/your/files .

(or you are going to get errors referring to port 22 despite your best efforts to avoid this port with --port=12345 or similar attempts at the appropriate argument which rsync does not actually support).

|

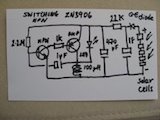

Are you interested in about making an electronic device which does something (slightly) useful, doesn't require an external power supply, and might last longer than you? I found this proposition enticing, and so, inspired by Kevin Horton's Infini-Flasher, I had a go at making my own version of Kevin's cunning device.

I firstly put together the components using a simple breadboard and found that I needed to make some changes to the component values listed in Kevin's design. I suspect that this is simply a result of using different transistor types. (This type of oscillator is fussy).

The changes simplified Kevin's circuit slightly, I omitted a pair of diodes, which are only needed if the supply voltage is high (I think), and changed the ordering of the NPN and PNP transistors. I also found that it was important to use a suitable value for the limiting resistor, between the super capacitor and the flasher circuit. I started out with 100k here, as in Kevin's circuit, but found that the circuit would not start to flash as the leakage through the flasher circuit was too high to allow the flasher circuit to reach a high enough value to actually start flashing. Clearly the circuit is a little sensitive to component tolerances. I recommend that you put together your circuit using a breadboard first, then solder everything together once you are sure that the component values are satisfactory.

This oscillator circuit works well for this application, as we want a very short duration of current usage, so that the relatively small amount of charge available from the capacitors lasts as long as possible. A simulation of the circuit shows that the on condition for the output transistor is very brief. This provides a brief spike of power to the inductor and in turn flashes the LED. As human eyes are very sensitive to short pulses of light (through having evolved to avoid the glinting teeth of sabre tooth tigers) this provides the most electrically economical means to light the LED. Even if the pulses were longer, the human eye would not appreciate the large expenditure of power that much.

Here (below) is the circuit diagram. Be warned that this battery free flasher is fascinating! The super capacitor (1F on the diagram) charges up from the solar solar cells in about 30 minutes under a lamp, or quite happily during the hours of daylight on a desk. When the voltage supplied to the flasher circuit reaches about 1.5 volts, the LED starts flashing. The current consumption is around 10 microamps, so the charge in the super capacitor lasts a long time, certainly more than a typical night time. So far my version has been flashing away happily on my desk for about a month. It should last as long as the electrolytic capacitors that it contains - that should be at least 20 years - or perhaps longer...

|

I changed work computers recently and on my new computer I needed to convert 16 CDs to .mp3 format. I didn't want to install new programs in the process, I just wanted to painlessly carry out the conversion. Putting the first CD into the drive led to Windows Media Player being launched and offering to 'Copy from CD' - so I accepted that offer.

After a little whirring, what I ended up with was a new 'Unknown Artist', and the content of the CDs as '.wma' files under "My Documents\My Music". Well, not exactly perfect because I prefer .mp3 files (because these are the most likely to work in any given mp3 player). Looking around at the options in Windows Media Player interface indicated that Microsoft were being typically unhelpful in not allowing users to store their music in formats other than .wma. However, using the various tools added to this machine's cygwin to deal with video, etc., I found it was easy to carry out the conversion. The conversion is a little slow, so rather than navigate all those silly directory names (containing irritating spaces...) I wrote a short script which traverses the "My Music" folder and converts .wma files to their more useful .mp3 cousins. Here is the script.

#!/bin/sh

scandir () {

for item in *

do

if [ -f "$item" ] ; then

curdir=`pwd`

nfile=`expr $nfile + 1`

EXT=`echo "$item" | rev | cut -c 1-3 | rev`

if [ $EXT = "wma" ]

then

ld2=`echo "$curdir"/"$item" | wc -c`

ld2=`expr $ld2 - 5`

echo "Operating on:"

echo "$curdir"/"$item"

NEWNAME=`echo "$curdir"/"$item" | cut -c-${ld2}`.mp3

echo "Will create:"

echo $NEWNAME

if [ -f "$NEWNAME" ]

then

echo "$NEWNAME exists - no action taken"

else

ffmpeg -i "$curdir"/"$item" tmp.wav

lame -h tmp.wav "$NEWNAME"

rm -f tmp.wav

fi

fi

elif [ -d "$item" ] ; then

cd "$item"

scandir

ndirs=`expr $ndirs + 1`

cd ..

fi

done

}

startdir=`pwd`

ld1=`echo $startdir | wc -c`

ld1=`expr $ld1 + 1`

echo "Initial directory = $startdir"

ndirs=0

nfile=0

scandir

echo "Total directories searched = $ndirs"

echo "Total files = $nfile"

How do you set about saving your many and varied jpg and mp3 files from an old machine?

There are a variety of possible strategies. Assuming we neglect those that presume that you have your files in a carefully organized directory structure, or a nice secure backup, here is a pragmatic approach.

First - make sure that you are using Linux, Mac OS X, or Cygwin on Windows. Then use find to collect a list of the files and their complete files names that you wish to save. For example, if you wanted to save all files with the suffix 'mp3', you would use:

find . -name "*.[mM][pP]3" -print > mp3files.txt

That will give you a list of mp3 flies in the text file 'mp3files.txt'. Then go over to the machine that you wish to copy the files to, and use a command like this to collect the files on the other machine:

ssh User@1.2.3.4 "cd /cygdrive/c; tar -T mp3files.txt -cvf -" | tar xvof -

(With suitable replacesments for the username, the address of the remote machine, and the directory to change directory to on that machine). This command executes an ssh command to the machine that has the files, goes to the root directory, then tar's the files to standard out, making use of the mp3files.txt list. Meanwhile back on the receiving machine, tar reads standard in and extracts the files. Hence you create a faithful copy of the files and directory structure on the receiving machine.

Why didn't I use rsync, you might inquire. Well - I tried and I found that rsync, on this particular version of Cygwin had a habit of hanging. Meanwhile tar and ssh do the job just fine. This method has advantages too. For example, if you want, you can remove files that you do not want to copy from mp3files.txt prior to doing the copy. So you have a high degree of control over what gets copied and what gets left behind.

Here is a recipe for concatenating mpg and other video format files.

ffmpeg -i a.mpg -s 480x360 -maxrate 2500k -bufsize 4000k -b 700k -ar 44100 i1.mpg ffmpeg -i b.mpg -s 480x360 -maxrate 2500k -bufsize 4000k -b 700k -ar 44100 i2.mpg ffmpeg -i c.mpg -s 480x360 -maxrate 2500k -bufsize 4000k -b 700k -ar 44100 i3.mpg cat i1.mpg i2.mpg i3.mpg > a.mpg ffmpeg -i a.mpg -sameq combined.mpg

This seems to work better than simply using 'sameq' throughout.

Here is another ffmpeg recipe. This time extracting the audio portion of a flash, or .flv, video file.

ffmpeg -i example.flv -ab 56 -ar 22050 -b 500 example.wav lame --preset standard example.wav example.mp3

You can also go directly to .mp3 from .flv using ffmpeg with a command like this.

ffmpeg -i example.flv -sameq example.mp3

But using lame seemed to give better results, as far as I could tell.

I was interested in video monitoring a room recently, and decided to use the built-in camera in a relatively elderly MacBook for this purpose. The procedure was extremely simple:

1. Display a video image from the camera on the screen using a suitable program

2. Run the script below, which saves a complete screen capture, compares it with the previous capture, and saves the image if there are significant differences

3. Turn off the display with F1

For additional impact, I also arranged for the MacBook to mail me updates - so if there is motion in the monitored area I receive an email notification. Because the entire screen is captured, each image is date stamped. (The email messages are also given the current date and time as a subject line). Here is the script:

#!/bin/sh

THRESHOLD=30

DELAY=5

count=0

while true

do

/usr/sbin/screencapture -m -tjpg -x /Users/username/Desktop/pictures/new.jpg

if [ ! -f old.jpg ]

then

cp new.jpg old.jpg

fi

/usr/local/bin/djpeg -ppm new.jpg > new.ppm

/usr/local/bin/djpeg -ppm old.jpg > old.ppm

~/bin/pnmpsnr new.ppm old.ppm > yup 2>&1

Y=`awk '/Y color/{print int($5)}' yup`

echo "Y is $Y"

if [ $Y -lt $THRESHOLD ]

then

count=`expr $count + 1`

name=`echo $count | awk '{printf "%06d.jpg", $1}'`

echo "Saving: " $name

cp new.jpg $name

uuencode $name $name > t

SUBJECT=`date`

EMAIL="xyz@abchlk.com"

/usr/bin/mail -s "$SUBJECT" "$EMAIL" < t

else

echo "No significant change in the images"

fi

cp new.jpg old.jpg

sleep $DELAY

done

I drew inspiration for a script from David Bowler's page. Thank you David! There are a few things that you need to do to get this work correctly. Firstly, you need to be able compile djpeg and netpbm. Apple make building open source software a massive pain (I wonder why, largest company in the world?!). Anyway, these things can be done fairly easily - once you've worked out why you need to download several gigabytes of the Xcode environment. Anyway, Xcode is needed, just for the gcc compiler. How Apple get away with making an open source compiler their own, and making it so difficult to install I don't know. But fortunately, they have paid off the right politicians, so no major anti-trust cases for Apple, just yet. djpeg is needed to turn the jpg screen capture into a ppm file. Just grab the source from here, and configure and make it using your newly acquired gcc compiler.

netpbms is needed for the utility which compares two ppm files, called pnmpsnr. I grabbed the source, and built it using make and gcc as usual. The build was not entirely error free (!) but pnmpsnr was built just fine. This is just a program that reads and processes ascii files, so it isn't exactly the most demanding software engineering activity.

After upgrading my Cygwin installation (which fixes some annoying socket problems for me) one residual problem was the display of certain characters in man pages. As this problem seemed to effect the emboldening of options like '-d', this made man pages virtually unusable. So, I then found, relatively empirically, that this can be fixed by adding the following lines to your ~/.profile file:

LANG=ISO-8859-1 export LANG

If you make this update, and open a new window, you should find that man pages return to their former, readable, glory.

I forgot that OS X's find command is not the Gnu find command. So, the find command used by the DirMerge.sh script needs a tweak to run on OS X. Here is that tweak.

#!/bin/sh

awk '

BEGIN{

dir1="\"" ARGV[1] "\"/"

dir2="\"" ARGV[2] "\"/"

readdir(dir1, lista, typea)

readdir(dir2, listb, typeb)

for(filea in lista){

if(filea in listb){

if(typea[filea] == "f"){

if(ckfile(dir1 "\"" filea "\"") != ckfile(dir2 "\"" filea "\"")){

print "# " dir1 "\"" filea "\"" " " dir2 "\"" filea "\""

print "# WARNING FILES DIFFER - CONTINUING - YOU NEED TO CHECK WHY!"

} else {

com[++ncom]="# files match " dir1 "\"" filea "\"" " " \

dir2 "\"" filea "\""

com[++ncom]="/usr/bin/rm " dir1 "\"" filea "\""

}

}

}else{

if(typea[filea] == "d" ){

dcom[++ndcom]="# directory needs to be created in the target"

dcom[++ndcom]="mkdir -p " substr(dir2,1,length(dir2)-2) \

substr(filea,2) "\""

} else {

com[++ncom]="# file needs to be moved to the target"

com[++ncom]="mv " substr(dir1,1,length(dir1)-2) substr(filea,2) \

"\"" " " substr(dir2,1,length(dir2)-2) substr(filea,2) "\""

}

}

}

if(!ncom && !ndcom){

print "No updates required"

exit

}

print "The following commands are needed to merge directories:"

for(i=1;i<=ndcom;i++){

print dcom[i]

}

for(i=1;i<=ncom;i++){

print com[i]

}

print "Do you want to execute these commands?"

getline ans < "/dev/tty"

if( ans == "y" || ans == "Y"){

for(i=1;i<=ndcom;i++){

print "Executing: " dcom[i]

escapefilename(dcom[i])

system(dcom[i])

close (dcom[i])

}

for(i=1;i<=ncom;i++){

print "Executing: " com[i]

escapefilename(com[i])

system(com[i])

close (com[i])

}

}

}

function ckfile(filename, cmd)

{

if (length(ck[filename])==0){

cmd="cksum " filename

cmd | getline ckout

close(cmd)

split(ckout, array," ")

ck[filename]=array[1]

}

return ck[filename]

}

function escapefilename(name){

gsub("\\$", "\\$", name) # deal with dollars in filename

gsub("\\(", "\\(", name) # and parentheses

gsub("\\)", "\\)", name)

}

# OS X does not have the -ls option for find, so work around this using stat

function readdir(dir, list, type, size, timestamp, ftype, fsize, name){

cmd="cd " dir ";find . -exec stat -r {} \\;"

print "Building list of files in: " dir

while (cmd | getline > 0){

timestamp=$10

if(substr($3,1,2)=="01"){

ftype="f"

} else if (substr($3,1,2)=="04") {

ftype="d"

} else {

ftype="unknown"

}

fsize=$8

for(i=1;i<=15;i++){

$(i)=""

}

name=substr($0,16)

if(name==".")continue

list[name]=int(timestamp)

type[name]=ftype

size[name]=fsize

}

close(cmd)

}' "$1" "$2"

When working on multiple machines, using external drives, and being constrained for disk space, it is all too easy to create cloned directory trees, which are similar but not identical to one another. Looking at the various directories cloned on my hard drive, I decided to create a simple script for merging directories, which is appended. Now, this is very simple, and be warned, it comes with no guarantees expressed or implied, and has minimal error checking. However, I find it useful and thought I would post it in case anyone else were interested.

Here is how it works...

1. Two arguments, the source directory, and the target directory are passed to the awk program

2. The awk program cd's to the source and target directories and builds associative arrays keyed on file names for the file's timestamp and file type (either file or directory)

3. Each file in the source directory is checked in the target directory.

4. If the same file name exists in the target, the checksums of each file are compared, if the files are identical, a command to delete the source file is stored

5. If the files are not identical, a warning is emitted and the file is left in place in the source directory for further investigation

6. If the source file or directory does not exist in the target it is moved to the target directory, again by storing the appropriate command

7. The user is shown the list of commands that the script has decided are required and asked if these should be executed

8. If requested, the merge commands are executed The effect is that identical files are deleted in the source (you already have them in the target after all). Files that are unique are copied to the target. Any files that are in conflict are left in place to be reconciled by hand.

As mentioned above - the script is crude and contains minimal error checking - use at your own risk!

#!/bin/sh

awk '

BEGIN{

dir1="\"" ARGV[1] "\"/"

dir2="\"" ARGV[2] "\"/"

readdir(dir1, lista, typea)

readdir(dir2, listb, typeb)

for(filea in lista){

if(filea in listb){

if(typea[filea] == "f"){

if(ckfile(dir1 "\"" filea "\"") != ckfile(dir2 "\"" filea "\"")){

print "# " dir1 "\"" filea "\"" " " dir2 "\"" filea "\""

print "# WARNING FILES DIFFER - CONTINUING - YOU NEED TO CHECK WHY!"

} else {

com[++ncom]="# files match " dir1 "\"" filea "\"" " " \

dir2 "\"" filea "\""

com[++ncom]="/usr/bin/rm " dir1 "\"" filea "\""

}

}

}else{

if(typea[filea] == "d" ){

dcom[++ndcom]="# directory needs to be created in the target"

dcom[++ndcom]="mkdir -p " substr(dir2,1,length(dir2)-2) \

substr(filea,2) "\""

} else {

com[++ncom]="# file needs to be moved to the target"

com[++ncom]="mv " substr(dir1,1,length(dir1)-2) substr(filea,2) \

"\"" " " substr(dir2,1,length(dir2)-2) substr(filea,2) "\""

}

}

}

if(!ncom && !ndcom){

print "No updates required"

exit

}

print "The following commands are needed to merge directories:"

for(i=1;i<=ndcom;i++){

print dcom[i]

}

for(i=1;i<=ncom;i++){

print com[i]

}

print "Do you want to execute these commands?"

getline ans < "/dev/tty"

if( ans == "y" || ans == "Y"){

for(i=1;i<=ndcom;i++){

print "Executing: " dcom[i]

escapefilename(dcom[i])

system(dcom[i])

close (dcom[i])

}

for(i=1;i<=ncom;i++){

print "Executing: " com[i]

escapefilename(com[i])

system(com[i])

close (com[i])

}

}

}

function ckfile(filename, cmd)

{

if (length(ck[filename])==0){

cmd="cksum " filename

cmd | getline ckout

close(cmd)

split(ckout, array," ")

ck[filename]=array[1]

}

return ck[filename]

}

function escapefilename(name){

gsub("\\$", "\\$", name) # deal with dollars in filename

gsub("\\(", "\\(", name) # and parentheses

gsub("\\)", "\\)", name)

}

function readdir(dir, list, type, timestamp, ftype, name){

cmd="cd " dir ";find . -printf \"%T@\\t%y\\t%p\\n\""

print "Building list of files in: " dir

while (cmd | getline > 0){

timestamp=$1

ftype=$2

$1=$2=""

name=substr($0,3)

list[name]=int(timestamp)

type[name]=ftype

}

close(cmd)

}' "$1" "$2"

If you want to backup a directory to a remote computer - but don't want to drag down your network's bandwidth you can use the --bwlimit option...

cd directory rsync --rsh='ssh -p 12345' --bwlimit 20 -avrzogtp . username@remote.servername.com:/users/username/directory | tee -a backup.txt

Here is a useful command which allows you to copy a file from a server to your machine or vice versa. Instead of using scp it uses rsync. The advantage of rsync is that if your connection breaks during the transfer, and you are left with a partial file, when you restart the command, rsync will pick up from where it left off. And this, of course, will save you time.

rsync --partial --progress --rsh=ssh user@yourserver.xyz.com:/home/path/to/file.zip .

If you have a PDF file and want to send only a portion to a friend or colleague, what do you do? With pdftk you can easily create subsets of the pages in a PDF. For example, if you want to drop 5 pages of preamble in a document that you need to send to your boss, you can do that with:

pdftk A=LongDocument.pdf cat A6-end output ShortDocument.pdf

Having a look through files on a Windows XP machine recently, I found two irritating files that I could not delete. Windows XP happily gave me a baffling 'Cannot delete file: Cannot read from source file or disk.' message box. (As helpful as usual). The solution to this problem is to prepend \\? to the file name that you are about to delete (using cmd.exe, of course, there is no known solution from the graphical interface). E.g.:

del "\\?\C:\Documents and Settings\User\Desktop\FOIA\documents\yamal\flxlist."

The magic \\? switches off some form of Windows XP file name sanity checking, allowing you to delete files which Windows XP thinks do not have valid names (although, of course, Windows XP did allow the creation of the files in the first place). Anyway, problem solved!